My last several posts have looked at voice-enabled bots, aka voicebots, in Interactive Voice Response (IVR) and other telephony applications. This post reviews another voicebot telephony connectivity option: the Voice.AI Gateway from AudioCodes. If you want to connect a voicebot to a phone network, then you need an RTC-Bot Gateway. AudioCodes was one of the first VoIP gateway infrastructure vendors more than 20 years ago, and the Voice.AI Gateway continues this gateway tradition. Instead of connecting disparate network technologies, this time they are connecting to various AI platforms for speech processing and bot interaction.

Voice.AI Gateway Approach

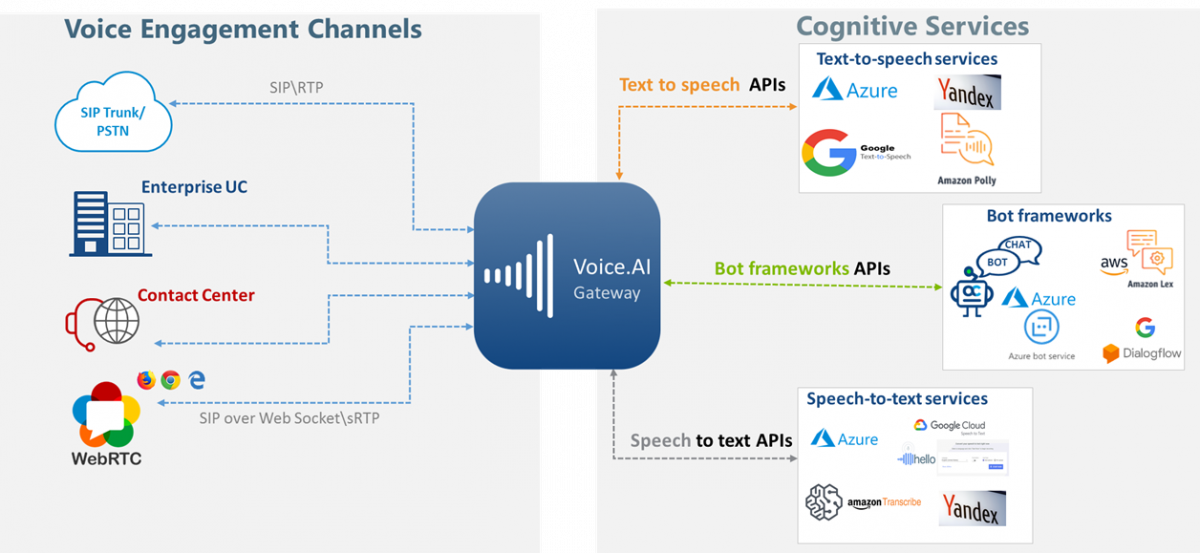

The AudioCodes Voice.AI gateway has some unique qualities compared to other RTC-bot gateway solutions on the market. You can see some of the differences in the marketing image from their product page below:

Diagram provided by AudioCodes. cogint.ai verified Azure Text-to-Speech, Google Text-to-Speech, Dialogflow, and AWS Polly in this review.

Notably, it is offered as a managed service that is designed to connect to a wide variety of existing telephony networks and interfaces with speech and bot APIs from various cloud providers.

Telephony Infrastructure

AudioCodes Voice.AI Gateway is based on its Session Border Controller (SBC) product. If you haven’t dealt with VoIP infrastructure that’s probably a new term. Session Border Controllers act as traffic controllers and transformers for VoIP traffic. Prior to some of the technologies that have now become standard with WebRTC, they also helped to deal with some of the firewall and NAT traversal issues and still do for most SIP networks. They help to mediate signaling differences, even within a standard protocol like SIP, and help with media conversion where needed. Since SBCs are essentially a gateway device, it is somewhat natural to expand on the number of things that can be connected to the gateway. This is what AudioCodes has done with AI-based speech and bot services.

The SBC core of the Voice.AI Gateway means it has many telephony connectivity options for SIP networks and some WebRTC-based devices. It can be used to connect with older Enterprise and Contact Center SIP infrastructure in addition to modern SIP trunks for inbound and outbound calling. I am not sure if this works in conjunction with the AI capabilities, but SBCs typically have excellent high availability options, with the ability to keep a call connected even in the face of a catastrophic software failure.

Managed Service

Unlike all the other RTC-Bot gateways I have reviewed, the Voice.AI Gateway is a managed service. This means AudioCodes handles all the setup, configuration, and infrastructure maintenance. If you want to make an adjustment, you need to ask AudioCodes to do it. This could be good or bad depending on your preferences. If you aren’t sure what you are doing or don’t have the staff to maintain a gateway, AudioCodes makes things easy. However, if you are the type of organization that likes to tinker and improve things yourself, then you’ll have to live with going through another party for your project, with minimal documentation and tools to see what is happening inside their black box.

AudioCodes prefers to run their managed service in Azure, though they support AWS too. They say they can also deploy this in a customer’s own cloud environment, which may be required in sensitive applications where routing customer data outside of the enterprise’s direct control is not allowed due to privacy concerns or regulatory requirements.

Multi-platform

The Voice.AI Gateway connects to many different speech and bot services. AudioCodes has stated this includes:

| Speech to Text providers | Text to Speech providers | Bot engines |
|---|---|---|
| | | |

Why so many services? Certainly, an existing Azure Bot Service environment would expect support for Azure’s transcription and Text to Speech (TTS) synthesis services. The same is true for Dialogflow. The additional TTS providers can help to give a bot a more distinctive voice. Beyond that, it is also possible to mix and match STT and TTS engines. This might make the most sense if someone had a Dialogflow bot but wanted to use something like Azure’s Custom Voice service that lets developers design their own unique synthesized voice. I don’t expect we will see too many mixed-platform voicebots for reasons we will touch on in the review, but there are certainly scenarios where it could make sense.

Pricing

AudioCodes has 2 mutually exclusive pricing options for its Voice.AI Gateways – per minute and per concurrent-session. I describe the pricing they shared with me below.

Per-minute option

The first model is priced on a per-minute basis:

| Minutes per Month | Price per Minute |
|---|---|
| 10,000 to 500,000 | $0.018 |
| 500,000 to 1,000,000 | $0.015 |

AudioCodes has a minimum charge of 10,000 minutes per month and a 12-month commitment, which comes to $180/mo and a total commitment of $2,160 for a year.

Per-session option

They also have a per-session option based on a maximum number of concurrent sessions per configuration:

| Sessions | Monthly price per session |
|---|---|
| 5-10 | $105 |
| 11-50 | $89 |
| 51-100 | $79 |
| 101-500 | $71 |

To use this option, you need to commit to at least 12 months of service at those levels with a minimum of 5 sessions a month: $525/mo, for a total 1-year commitment of $6,300.
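
To make the two options easier to compare, here is a minimal Python sketch of the quoted price tables. It assumes the tier price applies to all units in the month rather than marginally, and that a volume sitting exactly on a tier boundary is billed at the lower tier's rate; AudioCodes did not confirm either detail, and the function names are my own.

```python
# Price tiers as quoted in the tables above (USD).
PER_MINUTE_TIERS = [(500_000, 0.018), (1_000_000, 0.015)]        # (max minutes, price per minute)
PER_SESSION_TIERS = [(10, 105), (50, 89), (100, 79), (500, 71)]  # (max sessions, monthly price per session)
MIN_MINUTES = 10_000   # monthly minimum on the per-minute plan
MIN_SESSIONS = 5       # monthly minimum on the per-session plan


def per_minute_cost(minutes: int) -> float:
    """Monthly cost on the per-minute plan, billing at least the minimum."""
    billable = max(minutes, MIN_MINUTES)
    for ceiling, price in PER_MINUTE_TIERS:
        if billable <= ceiling:
            # Assumption: the tier price applies to every minute, not just the excess.
            return billable * price
    raise ValueError("volume is above the quoted tiers")


def per_session_cost(sessions: int) -> int:
    """Monthly cost on the per-session plan, billing at least the minimum."""
    billable = max(sessions, MIN_SESSIONS)
    for ceiling, price in PER_SESSION_TIERS:
        if billable <= ceiling:
            return billable * price
    raise ValueError("session count is above the quoted tiers")


if __name__ == "__main__":
    print(f"10,000 minutes: ${per_minute_cost(10_000):,.2f}/mo")
    print(f"5 sessions:     ${per_session_cost(5):,.2f}/mo")
```

Under these assumptions a single $105 session only pays for itself once it carries more than roughly 5,800 minutes a month (105 / 0.018), so the per-session option looks aimed at consistently busy lines.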

You will also need to cover the cost of transcription and speech synthesis, as well as the bot, on top of this. The math for a given scenario is going to vary significantly based on the voicebot and user interaction behavior. A chatty bot will incur more TTS charges. A chatty customer will incur more STT charges. As a lower-volume usage example, let’s assume our average voicebot interaction lasts 3 minutes, 40% of which is the customer, 50% is the bot, and 10% is silence, and that we will handle 10,000 calls a month. Using Azure’s Speech to Text (STT) and AWS Polly for Text to Speech (TTS), this comes to 3.12 cents a minute. SIP trunk connectivity will usually run a quarter cent to a cent a minute on top of that, but that still puts the Voice.AI Gateway price well below Dialogflow’s Phone Gateway pricing of 5 cents per minute for its Enterprise Essentials package.

| Service Charge | Unit Price | Unit | Units | Total |
|---|---|---|---|---|
| AudioCodes (less than 500,000 minutes) | $0.0180 | /minute | 30,000 | $540.00 |
| Azure Speech-to-Text, standard | $0.0167 | /minute | 12,000 | $200.00 |
| Dialogflow Text Charges | $0.0020 | /minute | 12,000 | $24.00 |
| AWS Polly TTS, neural voice | $0.0115 | /minute | 15,000 | $172.94 |
| Total | $0.0312 | /minute | 30,000 | $936.94 |
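
The arithmetic behind this scenario can be sketched in a few lines of Python. This is only an illustration under the assumptions stated above (3-minute calls, 40% customer speech, 50% bot speech) and it uses the rounded unit prices from the table, so individual line items can differ from the table by a few cents. The function and key names are my own.

```python
# Rounded unit prices from the table (USD per minute).
PRICE_PER_MINUTE = {
    "gateway": 0.0180,   # AudioCodes per-minute plan, under 500,000 minutes
    "stt": 0.0167,       # Azure Speech-to-Text, standard
    "bot_text": 0.0020,  # Dialogflow text charges, counted per customer minute as in the table
    "tts": 0.0115,       # AWS Polly neural voice
}

CUSTOMER_SHARE = 0.40  # share of call time the customer is speaking
BOT_SHARE = 0.50       # share of call time the bot is speaking


def monthly_costs(calls: int, minutes_per_call: float) -> dict:
    """Return each monthly line item plus the total and blended per-minute cost."""
    total_minutes = calls * minutes_per_call
    customer_minutes = total_minutes * CUSTOMER_SHARE
    bot_minutes = total_minutes * BOT_SHARE
    costs = {
        "gateway": total_minutes * PRICE_PER_MINUTE["gateway"],
        "stt": customer_minutes * PRICE_PER_MINUTE["stt"],
        "bot_text": customer_minutes * PRICE_PER_MINUTE["bot_text"],
        "tts": bot_minutes * PRICE_PER_MINUTE["tts"],
    }
    costs["total"] = sum(costs.values())
    costs["per_minute"] = costs["total"] / total_minutes
    return costs


if __name__ == "__main__":
    for item, value in monthly_costs(calls=10_000, minutes_per_call=3).items():
        print(f"{item:>10}: {value:,.4f}")
```

Changing the two share constants is a quick way to see how a chattier bot or a chattier customer shifts the blended per-minute cost.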

Product Review

Setup & Methodology

To provide a fair comparison with my other reviews, I decided to stick with the existing Dialogflow bot I used for the Voximplant and SignalWire posts. To connect to the bot, I exported the bot’s service account JSON from Google Cloud and sent it to the team at AudioCodes.

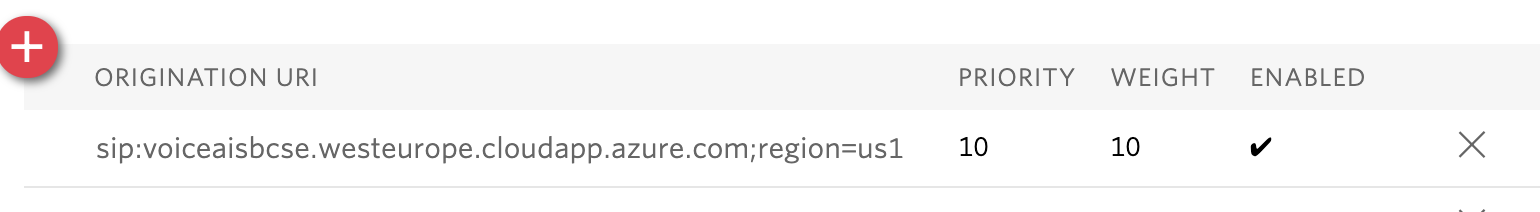

To show how I could control my own connectivity, I made a SIP trunk using Twilio’s Elastic SIP Trunking service. AudioCodes gave me a SIP URI and I plugged this into the Origination setup inside Twilio:

Then I assigned a phone number to this trunk. I shared that phone number along with Twilio’s published list of IP addresses for the AudioCodes team to whitelist. The next day they had it ready and I was able to verify the bot connected.

Since the Voice.AI Gateway is offered as a managed service, I don’t have much other setup or code to share beyond the few tidbits inside Dialogflow below since AudioCodes took care of everything else.

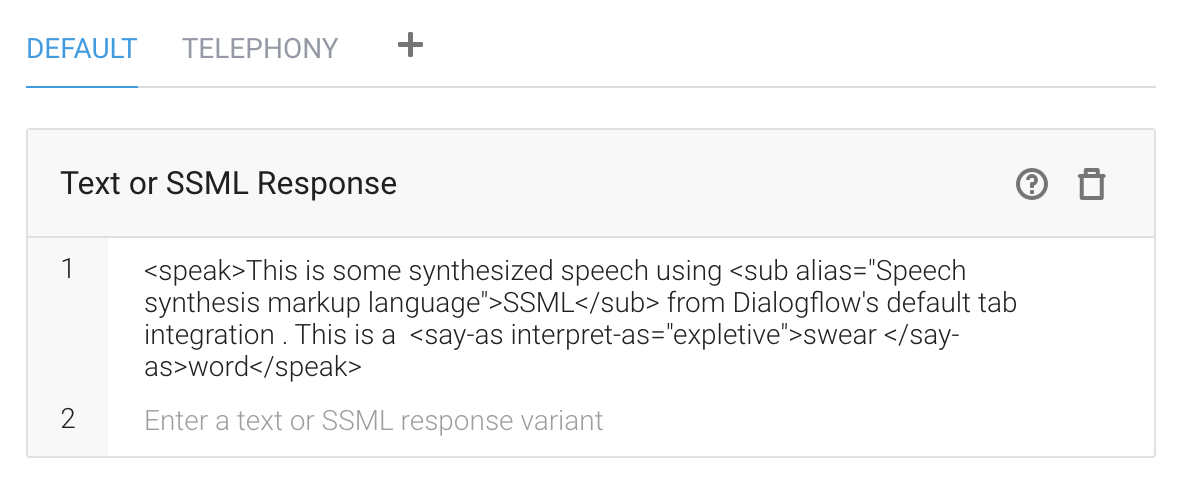

Speech to Text (STT)

The Voice.AI Gateway performs its own transcription with the chosen transcription engine before passing along that transcribed text to the bot. My final tests used Google Cloud Speech-to-Text.

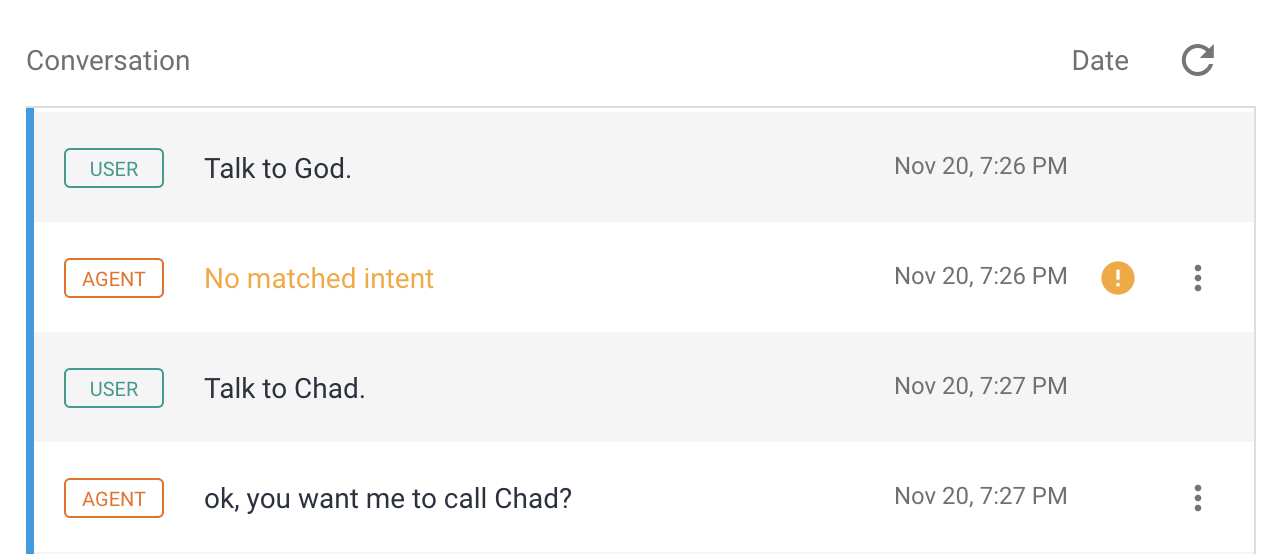

One of my intents is “Talk to Chad” to initiate a call transfer. In my testing, I noticed the transcription consistently would not capture my name properly. I never had this problem when Dialogflow did the transcription natively (vs. converting the speech to text first and sending it to Dialogflow that way, as was the case here).

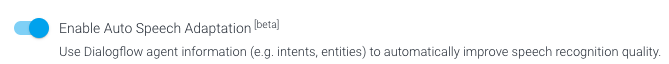

It is hard to tell whether the Google STT was not performing well or was not configured optimally. Dialogflow does some automatic optimizations to its own STT to help better match vocal utterances to intents with its Auto Speech Adaptation feature.

AudioCodes did say they just added an option to define words to help the transcription engine better match the input for Google’s Speech-to-Text service, like this:

    {
      "sttContextPhrases": [
        "Chad"
      ],
      "sttContextBoost": 10
    }

They say this could be included as an input parameter as I will cover in a bit.

Text to Speech (TTS)

Since the Voice.AI gateway supports voice generation I was eager to try some different voices. I decided to go with AWS Polly’s Emma for speech synthesis. Emma has an option for one of AWS’s higher-quality neural models. I also wanted to test synthesis in a different language variant than the bot was set to – en-GB in this case.

I verified SSML playback worked fine. Polly Lexicons for customizing pronunciation are not supported.

It would have been fun to experiment with Azure’s Custom Voice to make my own unique voice, but that was well beyond the scope of this review.

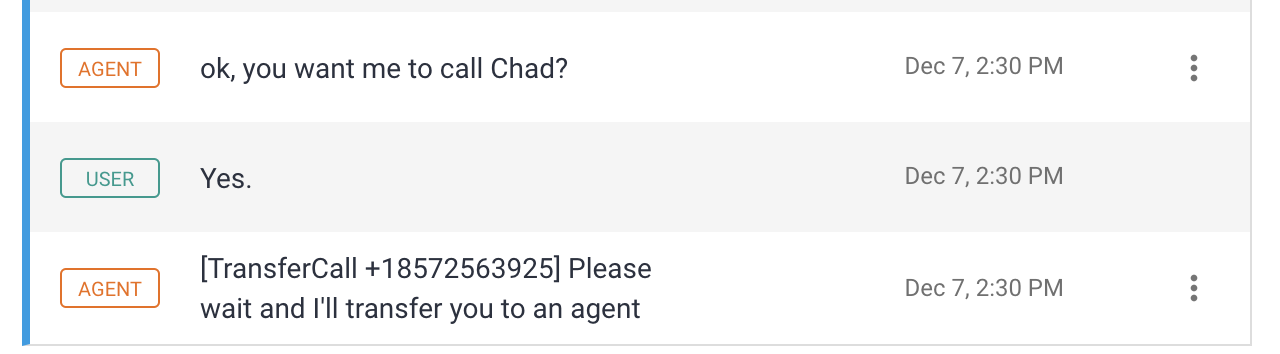

Call Transfer

The Voice.AI Gateway does not support Dialogflow’s Telephony Tab, but it is easy to initiate a call transfer with a TransferCall string followed by the number surrounded in brackets as can be seen below.

This actually has the advantage that you can set it to say something immediately before the transfer right from the GUI without having to do anything fancy with follow-up intents or fulfillment.

Recording

The Voice.AI Gateway does not record calls itself, but it does support the SIPREC standard for sending recordings to third-party recording systems. SIPREC is a SIP-based specification that essentially forks calls and sends the media to an external recording system. This approach is very common in large enterprises and call centers, which often have sophisticated recording systems for analysis and for storage to meet regulatory compliance. I did not verify this feature, but it is common in SBCs so I doubt there would be any issues.

Playback Interruption

The Voice.AI gateway does have barge-in capabilities. In testing I had to tell the AudioCodes team how long I should let a prompt play by default before starting STT again, letting the user barge-in.

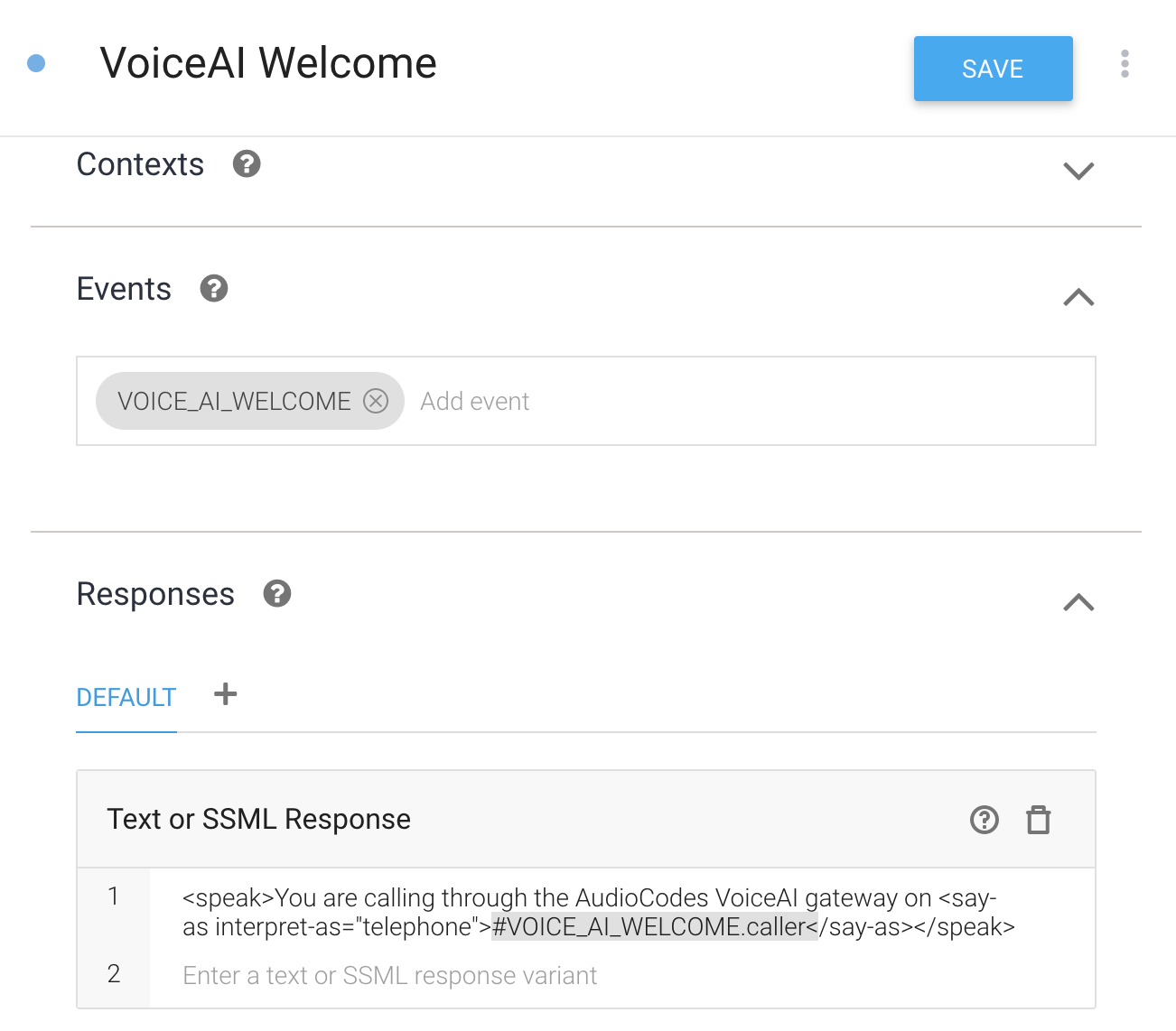

Events

Welcome

When a new call comes in, the Voice.AI gateway sends a VOICE_AI_WELCOME event. That can be used like the default WELCOME event or Dialogflow’s Phone Gateway TELEPHONY_WELCOME. This event also includes a parameter with the calling phone number that can be accessed with #VOICE_AI_WELCOME.caller.

The logs in Stackdriver show that the called number and SIP hosts are also included, as shown in the textPayload object below:

    textPayload: "Dialogflow Request : {"session":"95f08c1f-42ae-40f4-9ef0-f84e7c0c351b","query_input": {
      "event": {
        "name": "VOICE_AI_WELCOME",
        "parameters": {
          "calleeHost": "voiceaisbcse.westeurope.cloudapp.azure.com",
          "callee": "+16172076328",
          "callerHost": "cogintai-audiocodes.pstn.twilio.com",
          "caller": "+16173145968"
        }
      }
    },
    "timezone":"America/New_York"}"
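
If you want to pull these values out of the logs for reporting, a small Python sketch like the one below would do it. It assumes every entry has the same shape as the single log line shown above (a fixed `Dialogflow Request : ` prefix followed by JSON); I have not checked that against other entries, and the function name is my own.

```python
import json

# Assumption: the prefix seen in the one log entry above is constant.
PREFIX = "Dialogflow Request : "


def parse_welcome_log(text_payload: str) -> dict:
    """Extract the event name and call parameters from a textPayload string."""
    if not text_payload.startswith(PREFIX):
        raise ValueError("unexpected textPayload format")
    request = json.loads(text_payload[len(PREFIX):])
    event = request["query_input"]["event"]
    # Flatten to one dict: event name plus caller/callee details.
    return {"event": event["name"], **event["parameters"]}


if __name__ == "__main__":
    sample = PREFIX + json.dumps({
        "session": "95f08c1f-42ae-40f4-9ef0-f84e7c0c351b",
        "query_input": {"event": {
            "name": "VOICE_AI_WELCOME",
            "parameters": {
                "calleeHost": "voiceaisbcse.westeurope.cloudapp.azure.com",
                "callee": "+16172076328",
                "callerHost": "cogintai-audiocodes.pstn.twilio.com",
                "caller": "+16173145968",
            },
        }},
        "timezone": "America/New_York",
    })
    print(parse_welcome_log(sample))
```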

DTMF Detection

The Gateway will detect DTMF digit entry and send a DTMF event with a single DTMF digit in the digits parameter.
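
Since each event carries only one digit, collecting a multi-digit entry such as an account number would presumably have to happen in your own fulfillment code. Below is a minimal Python sketch of one way to do that. The class, the `#` terminator, and the digit limit are all my own illustrative choices, not anything AudioCodes provides, and where you keep the collector between webhook calls (per session, for example) is left open.

```python
from typing import Optional


class DtmfCollector:
    """Accumulates single DTMF digits into one entry (hypothetical helper)."""

    def __init__(self, terminator: str = "#", max_digits: int = 10) -> None:
        self.terminator = terminator
        self.max_digits = max_digits
        self._digits = []

    def add(self, digit: str) -> Optional[str]:
        """Add one digit; return the full entry once complete, else None."""
        if digit == self.terminator:
            return self._flush()
        self._digits.append(digit)
        if len(self._digits) >= self.max_digits:
            return self._flush()
        return None

    def _flush(self) -> str:
        # Hand back the collected digits and reset for the next entry.
        entry = "".join(self._digits)
        self._digits.clear()
        return entry


if __name__ == "__main__":
    collector = DtmfCollector()
    for digit in "4321#":
        entry = collector.add(digit)
        if entry is not None:
            print("caller entered:", entry)
```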

In the course of my discussions with AudioCodes, they mentioned they added some options to pass custom payload parameters to control various details. This includes setting the ASR engine to be continuous or to give it a timeout.

Control Parameters

I ran out of time to verify this and did not get any documentation, but the AudioCodes team said they added the following parameters that can be passed with the intent:

- speechLanguage
- voiceName
- continuousASR: True/False, to always listen for spoken input
- continuousASRTimeoutInMS: to control barge-in

These would need to be set in code as part of fulfillment or passed as a custom payload via the GUI. One can see how this would enable switching of voices, languages, and control of how and when you should let users speak over your bot’s speech output.
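
As a rough illustration of the fulfillment route, the Python sketch below builds a Dialogflow webhook response that carries these parameters in its custom `payload` field. The `fulfillmentText` and `payload` fields are part of Dialogflow's webhook response format, but where exactly the gateway expects the parameters inside the payload, and the value types used here, are my assumptions since I had no documentation. The function name is hypothetical.

```python
import json
from typing import Optional


def build_webhook_response(
    text: str,
    *,
    speech_language: Optional[str] = None,
    voice_name: Optional[str] = None,
    continuous_asr: Optional[bool] = None,
    continuous_asr_timeout_ms: Optional[int] = None,
) -> dict:
    """Build a webhook response with optional gateway control parameters."""
    params = {
        "speechLanguage": speech_language,
        "voiceName": voice_name,
        "continuousASR": continuous_asr,
        "continuousASRTimeoutInMS": continuous_asr_timeout_ms,
    }
    # Assumption: parameters sit at the top level of the custom payload.
    payload = {key: value for key, value in params.items() if value is not None}
    response = {"fulfillmentText": text}
    if payload:
        response["payload"] = payload
    return response


if __name__ == "__main__":
    response = build_webhook_response(
        "One moment while I look that up.",
        speech_language="en-GB",
        voice_name="Emma",
        continuous_asr=True,
        continuous_asr_timeout_ms=3000,
    )
    print(json.dumps(response, indent=2))
```

Only the parameters you pass are included, so an intent that just needs a different voice does not have to restate the barge-in settings.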

Synopsis

AudioCodes’ support for Dialogflow matured quite a bit in the couple of months I was interacting with them off-and-on for this post. It was apparent the system was originally architected around Azure. Azure Bot Service development is much more code-oriented vs. Dialogflow’s more typical GUI-centric method. Of course one can control a Dialogflow bot without the GUI, but this becomes one more thing that needs to be maintained without the benefit of the GUI’s guidance and tools. That is certainly not much work at all for an experienced bot developer, but it does mean more work for a Dialogflow novice who wants to keep things in the GUI as much as possible. Little things like hanging up when the call is done are still missing in the Voice.AI Gateway, but I am sure they will come soon.

Beyond that, the ability to mix platforms is nice, but I wouldn’t recommend doing that if it can be avoided. Building a great voicebot is usually hard enough without having to worry about additional interaction dependencies. Perhaps there are some exceptions if a specific synthesized voice has been chosen as a corporate brand and the IVR team decides to use a different underlying bot platform, or if a specific STT engine has already been tuned for one environment. A larger enterprise or call center may also have some premise-based transcription infrastructure in place they want to reuse. However, with few exceptions like Microsoft’s premise-based version of its bot framework, in most cases sensitive data will make its way to the cloud one way or another, so it is better to organize around modern cloud best practices. Most of the time companies choose one provider (Azure, AWS, or Google) and stick with them for everything. Voicebot development is certainly easier this way. Furthermore, the bots themselves are doing more to optimize for speech input to reduce latency (unless you do STT on device) and improve performance. Dialogflow and Amazon Lex bots accept direct audio input. Dialogflow includes speech output too. It seems AudioCodes is moving in the direction of using bot-platform integrated speech services, for Dialogflow at least.

Where I see the Voice.AI gateway really shining is in more traditional call center environments. These often have a lot of premise-based equipment, perhaps with some enterprise-controlled elements in the cloud. This audience often would not have dedicated staff to manage an RTC-Bot gateway. They would prefer to pay to have a neck to wring if something breaks, so the managed service makes a lot of sense here. Similarly, recording with SIPREC is only practical if you have a SIPREC recorder already, but that is what that audience usually has already.

Scorecard

The Voice.AI Gateway ended up being a reasonable fit against the criteria I outlined in prior posts. Certainly, some of these requirements will matter more than others for your specific project.

| Requirement | Voice.AI Gateway support |
|---|---|
| Call Transfer | Yes – simple short code method |
| Recording | Yes, but only with external SIPREC systems |
| Playback interruption | Yes – some options to set programmatically |
| No activity detection | No |
| DTMF detection | Yes |
| SMS | No |

I have some other reviews planned in the near future. Make sure to subscribe so you don't miss anything!

EDITS

14-Jan, 2020: Updated pricing per AudioCodes guidance to include volume minimums without a managed service requirement in Pricing

About the Author

Chad Hart is an analyst and consultant with cwh.consulting, a product management, marketing, and strategy advisory helping to advance the communications industry. In addition, recently he co-authored a study on AI in RTC and helped to organize an event / YouTube series covering that topic.
